Introduction: (Crime Rate Prediction System using Python)

Crime has troubled societies in every corner of the world for a long time, and measures are needed to reduce it. Our mission is to offer a crime prevention application that helps keep the public safe. Current policing strategies work towards finding criminals after a crime has occurred. With the help of technological advances, however, we can use historical crime data to recognize crime patterns and use these patterns to predict crimes beforehand.

We use clustering algorithms to predict crime-prone areas. Many clustering algorithms exist for grouping related data into clusters. The large volume of crime data sets, together with the complexity of the relationships within this kind of data, has made criminology an appropriate field for applying data mining techniques. Criminology is the scientific study of crime, criminal behavior and law enforcement, and it aims to identify crime characteristics. It is one of the most important fields where the application of data mining techniques can produce useful results. Identifying crime characteristics is the first step towards further analysis, and the knowledge gained from data mining can help and support police forces.

Clustering techniques convert data sets into clusters, which are then examined to determine crime-prone areas. These clusters visually represent groups of crimes overlaid on a map of the police jurisdiction. Each cluster stores the location of its crimes along with other details such as type and time. Clusters are classified by the number of members they contain: densely populated clusters are treated as crime-prone areas, whereas clusters with few members are ignored. Preventive measures are then implemented in crime-prone areas according to crime type.

K-means is one of the simplest and most commonly used clustering algorithms in scientific and industrial software. Because of its low computational cost, it is suitable for clustering large data sets. It has been used successfully in many fields, including market segmentation, computer vision, geostatistics, astronomy and agriculture. It is also often used as a pre-processing step for other algorithms, for example to find a starting configuration.
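
To make the idea concrete, here is a minimal sketch of k-means (Lloyd’s algorithm) in plain Python. The `incidents` coordinates, the `kmeans` function name and the choice of 2 clusters are assumptions made up for illustration; they are not taken from a real crime data set.

```python
import random

def kmeans(points, k, iterations=100, seed=0):
    """Cluster 2-D points with Lloyd's algorithm; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    labels = None
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: (p[0] - centroids[c][0]) ** 2
                                        + (p[1] - centroids[c][1]) ** 2)
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the centroids are stable
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = (sum(m[0] for m in members) / len(members),
                                sum(m[1] for m in members) / len(members))
    return centroids, labels

# Hypothetical incident locations forming two obvious groups.
incidents = [(1, 1), (1, 2), (2, 1), (2, 2), (8, 8), (8, 9), (9, 8), (9, 9)]
centroids, labels = kmeans(incidents, k=2)
print(sorted(centroids), labels)
```

In a crime-mapping setting the points would be incident coordinates, and the clusters with the most members would be the candidates for crime-prone areas.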

We chose clustering over supervised techniques such as classification because crimes vary widely in nature and crime databases are often filled with unsolved crimes. A classification technique that relies on existing, solved crimes will therefore not give good predictive quality for future crimes.

Data Mining

What is data mining?

Data mining is the computing process of discovering patterns in large data sets involving methods at the intersection of machine learning, statistics, and database systems. It is an essential process where intelligent methods are applied to extract data patterns. It is an interdisciplinary subfield of computer science. The overall goal of the data mining process is to extract information from a data set and transform it into an understandable structure for further use. Aside from the raw analysis step, it involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating. Data mining is the analysis step of the “knowledge discovery in databases” process, or KDD.

The knowledge discovery in databases (KDD) process is commonly defined with the stages:

- Selection

- Pre-processing

- Transformation

- Data mining

- Interpretation/evaluation

Many variations on this theme exist, however, such as the Cross Industry Standard Process for Data Mining (CRISP-DM), which defines six phases:

- Business Understanding

- Data Understanding

- Data Preparation

- Modeling

- Evaluation

- Deployment

Data mining involves six common classes of tasks:

- Anomaly detection (outlier/change/deviation detection) – The identification of unusual data records that might be interesting, or of data errors that require further investigation.

- Association rule learning (dependency modeling) – Searches for relationships between variables. For example, a supermarket might gather data on customer purchasing habits. Using association rule learning, the supermarket can determine which products are frequently bought together and use this information for marketing purposes. This is sometimes referred to as market basket analysis.

- Clustering – is the task of discovering groups and structures in the data that are in some way or another “similar”, without using known structures in the data.

- Classification – is the task of generalizing known structure to apply to new data. For example, an e-mail program might attempt to classify an e-mail as “legitimate” or as “spam”.

- Regression – attempts to find a function which models the data with the least error, that is, for estimating the relationships among data or data sets.

- Summarization – providing a more compact representation of the data set, including visualization and report generation.

Data Mining Algorithms

- C4.5

- k-means

- Support vector machines

- Apriori

- EM

- PageRank

- AdaBoost

- kNN

- Naive Bayes

C4.5

What does it do? C4.5 constructs a classifier in the form of a decision tree. In order to do this, C4.5 is given a set of data representing things that are already classified.

Wait, what’s a classifier? A classifier is a tool in data mining that takes a bunch of data representing things we want to classify and attempts to predict which class the new data belongs to.

What’s an example of this? Sure, suppose a data set contains a bunch of patients. We know various things about each patient like age, pulse, blood pressure, VO2max, family history, etc. These are called attributes. Now:

Given these attributes, we want to predict whether the patient will get cancer. The patient can fall into 1 of 2 classes: will get cancer or won’t get cancer. C4.5 is told the class for each patient.

And here’s the deal:

Using a set of patient attributes and the patient’s corresponding class, C4.5 constructs a decision tree that can predict the class for new patients based on their attributes.

Cool, so what’s a decision tree? Decision tree learning creates something similar to a flowchart to classify new data. Using the same patient example, one particular path in the flowchart could be:

- Patient has a history of cancer

- Patient is expressing a gene highly correlated with cancer patients

- Patient has tumours

- Patient’s tumour size is greater than 5cm

The bottom line is:

At each point in the flowchart is a question about the value of some attribute, and depending on those values, the patient gets classified. You can find lots of examples of decision trees.

Is this supervised or unsupervised? This is supervised learning, since the training data set is labelled with classes. Using the patient example, C4.5 doesn’t learn on its own that a patient will get cancer or won’t get cancer. We told it first, it generated a decision tree, and now it uses the decision tree to classify.

You might be wondering how C4.5 is different than other decision tree systems.

- First, C4.5 uses information gain (more precisely the gain ratio, a normalized form of information gain) when generating the decision tree.

- Second, although other systems also incorporate pruning, C4.5 uses a single-pass pruning process to mitigate over-fitting. Pruning results in many improvements.

- Third, C4.5 can work with both continuous and discrete data. My understanding is it does this by specifying ranges or thresholds for continuous data thus turning continuous data into discrete data.

- Finally, incomplete data is dealt with in its own ways.
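
To show what information gain measures, here is a small sketch that scores two attributes on a made-up patient table. The attribute names, the four patients and the function names are assumptions for illustration. This computes plain information gain only; it is not an implementation of C4.5, which also normalizes the gain, prunes the tree and handles continuous and missing values.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())

def information_gain(rows, labels, attribute):
    """Entropy removed by splitting the rows on one discrete attribute."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attribute], []).append(label)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder

# Hypothetical patients: two discrete attributes and a known class for each.
patients = [
    {"family_history": "yes", "smoker": "yes"},
    {"family_history": "yes", "smoker": "no"},
    {"family_history": "no", "smoker": "yes"},
    {"family_history": "no", "smoker": "no"},
]
classes = ["cancer", "cancer", "no cancer", "no cancer"]

for attribute in ("family_history", "smoker"):
    print(attribute, information_gain(patients, classes, attribute))
```

In this toy table `family_history` separates the classes perfectly while `smoker` tells us nothing, so a tree builder would ask about family history first.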

Why use C4.5? Arguably, the best selling point of decision trees is their ease of interpretation and explanation. They are also quite fast, quite popular and the output is human readable.

Where is it used? A popular open-source Java implementation can be found over at OpenTox. Orange, an open-source data visualization and analysis tool for data mining, implements C4.5 in their decision tree classifier.

Support Vector Machines

What does it do? Support vector machine (SVM) learns a hyperplane to classify data into 2 classes. At a high level, SVM performs a similar task to C4.5, except SVM doesn’t use decision trees at all.

Whoa, a hyper-what? A hyperplane is a function like the equation for a line, y = mx + b. In fact, for a simple classification task with just 2 features, the hyperplane can be a line.

As it turns out:

SVM can perform a trick to project your data into higher dimensions. Once projected into higher dimensions, SVM figures out the best hyperplane which separates your data into the 2 classes.

Do you have an example? Absolutely, the simplest example I found starts with a bunch of red and blue balls on a table. If the balls aren’t too mixed together, you could take a stick and without moving the balls, separate them with the stick.

You see:

When a new ball is added on the table, by knowing which side of the stick the ball is on, you can predict its color.

What do the balls, table and stick represent? The balls represent data points, and the red and blue color represents 2 classes. The stick represents the simplest hyperplane which is a line.

And the coolest part?

SVM figures out the function for the hyperplane.

What if things get more complicated? Right, they frequently do. If the balls are mixed together, a straight stick won’t work.

Here’s the work-around:

Quickly lift up the table throwing the balls in the air. While the balls are in the air and thrown up in just the right way, you use a large sheet of paper to divide the balls in the air.

You might be wondering if this is cheating:

Nope, lifting up the table is the equivalent of mapping your data into higher dimensions. In this case, we go from the 2 dimensional table surface to the 3 dimensional balls in the air.

How does SVM do this? By using a kernel we have a nice way to operate in higher dimensions. The large sheet of paper is still called a hyperplane, but it is now a function for a plane rather than a line: once we’re in 3 dimensions, the hyperplane must be a plane.
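
The “lifting” idea can be sketched in a few lines. To keep it tiny, this example goes from 1 dimension to 2 rather than from 2 to 3, and it uses an explicit mapping with a hand-picked cutoff instead of a kernel or a trained SVM. The ball positions, the `lift` function and the cutoff value are all assumptions for illustration.

```python
# Hypothetical 1-D "balls": blue ones sit near 0, red ones sit further out,
# so no single cut-off point on the line separates the two colours.
balls = [(-3, "red"), (-2, "red"), (-1, "blue"), (0, "blue"),
         (1, "blue"), (2, "red"), (3, "red")]

def lift(x):
    """Map a 1-D position to 2-D by adding its square as a height."""
    return (x, x * x)

def classify(x, height_cutoff=2.5):
    """In the lifted space, a horizontal line at the cutoff splits the classes."""
    _, height = lift(x)
    return "red" if height > height_cutoff else "blue"

predictions = [classify(x) for x, _ in balls]
print(predictions)
```

After lifting, the blue balls have heights 0 and 1 and the red balls have heights 4 and 9, so a straight line separates them even though no single point on the original line could.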

How do balls on a table or in the air map to real-life data? A ball on a table has a location that we can specify using coordinates. For example, a ball could be 20cm from the left edge and 50cm from the bottom edge. Another way to describe the ball is as (x, y) coordinates or (20, 50). x and y are 2 dimensions of the ball.

Here’s the deal:

If we had a patient dataset, each patient could be described by various measurements like pulse, cholesterol level, blood pressure, etc. Each of these measurements is a dimension.

The bottom line is:

SVM does its thing, maps them into a higher dimension and then finds the hyperplane to separate the classes.

Margins are often associated with SVM. What are they? The margin is the distance between the hyperplane and the closest data point from each class. In the ball and table example, the distance between the stick and the closest red and blue ball is the margin.

The key is:

SVM attempts to maximize the margin, so that the hyperplane is just as far away from the closest red ball as from the closest blue ball. In this way, it decreases the chance of misclassification.

Where does SVM get its name from? Using the ball and table example, the hyperplane is equidistant from a red ball and a blue ball. These balls or data points are called support vectors, because they support the hyperplane.
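
Here is a small sketch of how the margin and the support vectors could be read off once a stick has been placed. The ball coordinates and the line `x + y - 4 = 0` are hand-picked assumptions; a real SVM would search for the line that maximizes this margin rather than being handed one.

```python
from math import hypot, isclose

def distance_to_line(point, w, b):
    """Distance from a 2-D point to the line w[0]*x + w[1]*y + b = 0."""
    return abs(w[0] * point[0] + w[1] * point[1] + b) / hypot(w[0], w[1])

red = [(0, 1), (1, 1)]    # hypothetical red balls
blue = [(3, 3), (4, 4)]   # hypothetical blue balls
w, b = (1, 1), -4         # hand-picked stick: x + y - 4 = 0

distances = {ball: distance_to_line(ball, w, b) for ball in red + blue}
margin = min(distances.values())
# The balls sitting exactly at the margin are the support vectors.
support_vectors = [ball for ball, d in distances.items() if isclose(d, margin)]
print(margin, support_vectors)
```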

Is this supervised or unsupervised? This is supervised learning, since a dataset is used to first teach the SVM about the classes. Only then is the SVM capable of classifying new data.

Why use SVM? SVM along with C4.5 are generally the 2 classifiers to try first. No classifier will be the best in all cases due to the No Free Lunch Theorem. In addition, kernel selection and interpretability are some weaknesses.

Where is it used? There are many implementations of SVM. A few of the popular ones are scikit-learn, MATLAB and of course libsvm.

Apriori

What does it do? The Apriori algorithm learns association rules and is applied to a database containing a large number of transactions.

What are association rules? Association rule learning is a data mining technique for learning correlations and relations among variables in a database.

What’s an example of Apriori? Let’s say we have a database full of supermarket transactions. You can think of a database as a giant spreadsheet where each row is a customer transaction and every column represents a different grocery item.

Here’s the best part:

By applying the Apriori algorithm, we can learn the grocery items that are purchased together, a.k.a. association rules.

The power of this is:

You can find those items that tend to be purchased together more frequently than other items — the ultimate goal being to get shoppers to buy more. Together, these items are called item sets.

For example:

You can probably quickly see that chips + dip and chips + soda seem to frequently occur together. These are called 2-itemsets. With a large enough dataset, it will be much harder to “see” the relationships especially when you’re dealing with 3-itemsets or more. That’s precisely what Apriori helps with!

You might be wondering how Apriori works? Before getting into the nitty-gritty of algorithm, you’ll need to define 3 things:

- The first is the size of your item set. Do you want to see patterns for a 2-itemset, 3-itemset, etc.?

- The second is your support or the number of transactions containing the item set divided by the total number of transactions. An item set that meets the support is called a frequent item set.

- The third is your confidence, or the conditional probability of some item given that you have certain other items in your item set. A good example: given chips in your item set, there is a 67% confidence of having soda also in the item set.
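
Support and confidence are easy to compute by hand on a small example. The five transactions below are made up so that the chips and soda numbers come out at 67%; the function names are assumptions too.

```python
# Hypothetical supermarket transactions, one set of items per customer.
transactions = [
    {"chips", "dip", "soda"},
    {"chips", "soda"},
    {"chips", "dip"},
    {"dip"},
    {"soda", "bread"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in the item set."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Estimated probability of the consequent given the antecedent."""
    return (support(antecedent | consequent, transactions)
            / support(antecedent, transactions))

chips_soda_support = support({"chips", "soda"}, transactions)
chips_to_soda = confidence({"chips"}, {"soda"}, transactions)
print(chips_soda_support)        # 2 of 5 transactions
print(round(chips_to_soda, 2))   # 2 of the 3 chips transactions also have soda
```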

The basic Apriori algorithm is a 3 step approach:

Join. Scan the whole database for how frequent 1-itemsets are.

Prune. Those item sets that satisfy the support and confidence move onto the next round for 2-itemsets.

Repeat. This is repeated for each item set level until we reach our previously defined size.
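
The join, prune and repeat loop can be sketched as follows. This is a simplified version under a few assumptions: the transactions are made up, the function name and parameters are hypothetical, and candidates are pruned on support only, which is how frequent item sets are usually mined before confidence is checked on the resulting rules.

```python
from itertools import combinations

def apriori(transactions, min_support, max_size):
    """Return {item set: support} for frequent item sets up to max_size items."""
    def support(itemset):
        return sum(itemset <= t for t in transactions) / len(transactions)

    items = sorted({item for t in transactions for item in t})
    level = [frozenset([i]) for i in items if support(frozenset([i])) >= min_support]
    frequent = {}
    size = 1
    while level and size <= max_size:
        frequent.update((s, support(s)) for s in level)
        known = set(level)
        # Join: merge frequent item sets into candidates one item larger.
        candidates = {a | b for a in level for b in level if len(a | b) == size + 1}
        # Prune: keep candidates whose subsets are all frequent and that meet the support.
        level = [c for c in candidates
                 if all(frozenset(s) in known for s in combinations(c, size))
                 and support(c) >= min_support]
        size += 1
    return frequent

transactions = [
    {"chips", "dip", "soda"},
    {"chips", "soda"},
    {"chips", "dip"},
    {"dip"},
    {"soda", "bread"},
]
frequent = apriori(transactions, min_support=0.4, max_size=3)
pairs = {s for s in frequent if len(s) == 2}
print(pairs)
```

With a minimum support of 0.4, bread drops out in the first round, and dip + soda drops out in the second, so no 3-itemset survives the prune step.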

Is this supervised or unsupervised? Apriori is generally considered an unsupervised learning approach, since it’s often used to discover or mine for interesting patterns and relationships.

Apriori can also be modified to do classification based on labelled data.

Why use Apriori? Apriori is well understood, easy to implement and has many derivatives.

On the other hand, the algorithm can be quite memory, space and time intensive when generating item sets.

Where is it used? Plenty of implementations of Apriori are available. Some popular ones are the AR tool, Weka, and Orange.

EM

What does it do? In data mining, expectation-maximization (EM) is generally used as a clustering algorithm (like k-means) for knowledge discovery.

In statistics, the EM algorithm iterates and optimizes the likelihood of seeing observed data while estimating the parameters of a statistical model with unobserved variables.

Here are a few concepts that will make this way easier.

What’s a statistical model? I see a model as something that describes how observed data is generated. For example, the grades for an exam could fit a bell curve, so the assumption that the grades are generated via a bell curve (a.k.a. normal distribution) is the model.

What’s a distribution? A distribution represents the probabilities for all measurable outcomes. For example, the grades for an exam could fit a normal distribution. This normal distribution represents all the probabilities of a grade.

In other words, given a grade, you can use the distribution to determine how many exam takers are expected to get that grade.

Cool, what are the parameters of a model? A parameter describes a distribution which is part of a model. For example, a bell curve can be described by its mean and variance.

Using the exam scenario, the distribution of grades on an exam (the measurable outcomes) followed a bell curve (this is the distribution). The mean was 85 and the variance was 100.

So, all you need to describe a normal distribution are 2 parameters:

- The mean

- The variance

Likelihood? Going back to our previous bell curve example… suppose we have a bunch of grades and are told the grades follow a bell curve. However, we’re not given all the grades… only a sample.

Here’s the deal:

We don’t know the mean or variance of all the grades, but we can estimate them using the sample. The likelihood is the probability that the bell curve with estimated mean and variance results in those bunch of grades.

In other words, given a set of measurable outcomes, let’s estimate the parameters. Using these estimated parameters, the hypothetical probability of the outcomes is called likelihood.

Remember, it’s the hypothetical probability of the existing grades, not the probability of a future grade.
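
A short sketch makes the idea of likelihood tangible. The five grades are made up (chosen so the estimates come out at a mean of 85 and a variance of 100), and the function names are assumptions. Because grades are treated as continuous, the code multiplies densities rather than true probabilities, and it works with the logarithm of the likelihood to keep the numbers manageable.

```python
from math import exp, log, pi, sqrt

def normal_pdf(x, mean, variance):
    """Density of the bell curve with the given mean and variance at x."""
    return exp(-(x - mean) ** 2 / (2 * variance)) / sqrt(2 * pi * variance)

def log_likelihood(sample, mean, variance):
    """Log of the product of densities, i.e. the sum of log densities."""
    return sum(log(normal_pdf(x, mean, variance)) for x in sample)

grades = [70, 80, 85, 90, 100]  # hypothetical sample of exam grades

# Estimate the parameters from the sample.
est_mean = sum(grades) / len(grades)
est_variance = sum((g - est_mean) ** 2 for g in grades) / len(grades)

good_fit = log_likelihood(grades, est_mean, est_variance)
poor_fit = log_likelihood(grades, 60, est_variance)  # a deliberately bad guess
print(est_mean, est_variance, good_fit > poor_fit)
```

The bell curve with the estimated parameters explains the existing grades better than the curve centred on 60, which is exactly what a higher likelihood means.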

You’re probably wondering, what’s probability then?

Using the bell curve example, suppose we know the mean and variance. Then we’re told the grades follow a bell curve. The chance that we observe certain grades and how often they are observed is the probability.

In more general terms, given the parameters, let’s estimate what outcomes should be observed. That’s what probability does for us.

Now, what’s the difference between observed and unobserved data? Observed data is the data that you saw or recorded. Unobserved data is data that is missing. There are a number of reasons that the data could be missing (not recorded, ignored, etc.).

Here’s the kicker:

For data mining and clustering, what’s important to us is looking at the class of a data point as missing data. We don’t know the class, so interpreting missing data this way is crucial for applying EM to the task of clustering.

Once again: The EM algorithm iterates and optimizes the likelihood of seeing observed data while estimating the parameters of a statistical model with unobserved variables. Hopefully, this is way more understandable now.

By optimizing the likelihood, EM generates an awesome model that assigns class labels to data points — sounds like clustering!

How does EM help with clustering? EM begins by making a guess at the model parameters.

Then it follows an iterative 3-step process:

- E-step: Based on the model parameters, it calculates the probabilities for assignments of each data point to a cluster.

- M-step: Update the model parameters based on the cluster assignments from the E-step.

- Repeat until the model parameters and cluster assignments stabilize (a.k.a. convergence).
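
The steps above can be sketched for the simplest case: two clusters of 1-dimensional data, each modelled as a bell curve. The grades, the starting guess and the function names are assumptions for illustration, and the loop runs a fixed number of iterations instead of testing for convergence.

```python
from math import exp, pi, sqrt

def normal_pdf(x, mean, variance):
    return exp(-(x - mean) ** 2 / (2 * variance)) / sqrt(2 * pi * variance)

def em_two_clusters(data, iterations=20):
    """Fit a mixture of two 1-D normal distributions with EM."""
    # Initial guess: one cluster at each end of the data, equal weights.
    means = [min(data), max(data)]
    variances = [1.0, 1.0]
    weights = [0.5, 0.5]
    for _ in range(iterations):
        # E-step: probability that each point belongs to each cluster.
        memberships = []
        for x in data:
            scores = [weights[k] * normal_pdf(x, means[k], variances[k])
                      for k in range(2)]
            memberships.append([s / sum(scores) for s in scores])
        # M-step: re-estimate the parameters from those soft assignments.
        for k in range(2):
            size = sum(m[k] for m in memberships)
            means[k] = sum(m[k] * x for m, x in zip(memberships, data)) / size
            variances[k] = max(
                sum(m[k] * (x - means[k]) ** 2 for m, x in zip(memberships, data)) / size,
                1e-6)  # floor keeps the density finite if a cluster collapses
            weights[k] = size / len(data)
    return means, variances, memberships

grades = [60, 62, 64, 88, 90, 92]  # hypothetical grades from two groups
means, variances, memberships = em_two_clusters(grades)
clusters = [m.index(max(m)) for m in memberships]
print(means, clusters)
```

The class of each grade was never given; it is the missing data that the E-step fills in with probabilities.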

Is this supervised or unsupervised? Since we do not provide labelled class information, this is unsupervised learning.

Why use EM? A key selling point of EM is it’s simple and straight-forward to implement. In addition, not only can it optimize for model parameters, it can also iteratively make guesses about missing data.

This makes it great for clustering and generating a model with parameters. Knowing the clusters and model parameters, it’s possible to reason about what the clusters have in common and which cluster new data belongs to.

EM is not without weaknesses, though. First, EM is fast in the early iterations, but slow in the later iterations.

Second, EM doesn’t always find the optimal parameters and gets stuck in local optima rather than global optima.

Where is it used? The EM algorithm is available in Weka. R has an implementation in the mclust package. scikit-learn also has an implementation in its mixture module (the GaussianMixture class, which replaced the older GMM class).

PageRank

What does it do? PageRank is a link analysis algorithm designed to determine the relative importance of some object linked within a network of objects.

What’s link analysis? It’s a type of network analysis looking to explore the associations (a.k.a. links) among objects.

Here’s an example: The most prevalent example of PageRank is Google’s search engine. Although their search engine doesn’t solely rely on PageRank, it’s one of the measures Google uses to determine a web page’s importance.

Let me explain:

Web pages on the World Wide Web link to each other. If rayli.net links to a web page on CNN, a vote is added for the CNN page indicating rayli.net finds the CNN web page relevant.

And it doesn’t stop there…

rayli.net’s votes are in turn weighted by rayli.net’s importance and relevance. In other words, any web page that has voted for rayli.net increases rayli.net’s relevance.

The bottom line?

This concept of voting and relevance is PageRank. rayli.net’s vote for CNN increases CNN’s PageRank and the strength of rayli.net’s PageRank influences how much its vote affects CNN’s PageRank.
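
The voting idea can be sketched with a simple iterative calculation. The tiny link graph, the two `.example` blogs, the damping factor of 0.85 and the function name are assumptions for illustration; this is not how Google computes its actual scores.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Power iteration over a dict mapping each page to the pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    rank = {page: 1 / len(pages) for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1 - damping) / len(pages) for page in pages}
        for page in pages:
            targets = links.get(page) or pages  # a page with no links votes for everyone
            for target in targets:
                # Each vote is weighted by the voter's own rank, split across its links.
                new_rank[target] += damping * rank[page] / len(targets)
        rank = new_rank
    return rank

# Hypothetical mini web: who links to whom.
links = {
    "rayli.net": ["CNN"],
    "blog-a.example": ["rayli.net", "CNN"],
    "blog-b.example": ["CNN"],
    "CNN": ["rayli.net"],
}
ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

CNN ends up on top because it collects the most votes, and rayli.net comes second because its single incoming vote from CNN carries a lot of weight.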

What does a PageRank of 0, 1, 2, 3, etc. mean? Although the precise meaning of a PageRank number isn’t disclosed by Google, we can get a sense of its relative meaning.

And here’s how:

It’s a bit like a popularity contest. We all have a sense of which websites are relevant and popular in our minds.

What other applications are there of PageRank? PageRank was specifically designed for the World Wide Web.

Think about it:

At its core, PageRank is really just a super effective way to do link analysis. The objects being linked don’t have to be web pages.

Here are 3 innovative applications of PageRank:

- Dr Stefano Allesina, from the University of Chicago, applied PageRank to ecology to determine which species are critical for sustaining ecosystems.

- Twitter developed WTF (Who-to-Follow) which is a personalized PageRank recommendation engine about who to follow.

- Bin Jiang, from The Hong Kong Polytechnic University, used a variant of PageRank to predict human movement rates based on topographical metrics in London.

Is this supervised or unsupervised? PageRank is generally considered an unsupervised learning approach, since it’s often used to discover the importance or relevance of a web page.

Why use PageRank? Arguably, the main selling point of PageRank is its robustness due to the difficulty of getting a relevant incoming link.

Simply stated:

If you have a graph or network and want to understand relative importance, priority, ranking or relevance, give PageRank a try.

Where is it used? The PageRank trademark is owned by Google. However, the PageRank algorithm is actually patented by Stanford University.

You might be wondering if you can use PageRank:

I’m not a lawyer, so best to check with an actual lawyer, but you can probably use the algorithm as long as it doesn’t commercially compete against Google/Stanford.

Here are 3 implementations of PageRank:

- C++ Open Source PageRank Implementation

- Python PageRank Implementation

- igraph – The network analysis package (R)

AdaBoost

What does it do? AdaBoost is a boosting algorithm which constructs a classifier.

As you probably remember, a classifier takes a bunch of data and attempts to predict or classify which class a new data element belongs to.

But what’s boosting? Boosting is an ensemble learning algorithm which takes multiple learning algorithms (e.g. decision trees) and combines them. The goal is to take an ensemble or group of weak learners and combine them to create a single strong learner.

What’s the difference between a strong and weak learner? A weak learner classifies with accuracy barely above chance. A popular example of a weak learner is the decision stump which is a one-level decision tree.

A strong learner has much higher accuracy, and an often used example of a strong learner is SVM.

What’s an example of AdaBoost? Let’s start with 3 weak learners. We’re going to train them in 10 rounds on a training dataset containing patient data. The dataset contains details about the patient’s medical records.

How can we predict whether the patient will get cancer?

In round 1: AdaBoost takes a sample of the training dataset and tests to see how accurate each learner is. The end result is we find the best learner.

In addition, samples that are misclassified are given a heavier weight, so that they have a higher chance of being picked in the next round.

One more thing, the best learner is also given a weight depending on its accuracy and incorporated into the ensemble of learners (right now there’s just 1 learner).

In round 2: AdaBoost again attempts to look for the best learner.

And here’s the kicker:

The sample of patient training data is now influenced by the heavier weights on misclassified patients. In other words, previously misclassified patients have a higher chance of showing up in the sample.

Why?

It’s like getting to the second level of a video game and not having to start all over again when your character is killed. Instead, you start at level 2 and focus all your efforts on getting to level 3.

Likewise, the first learner likely classified some patients correctly. Instead of trying to classify them again, let’s focus all the efforts on getting the misclassified patients.

The best learner is again weighted and incorporated into the ensemble, misclassified patients are weighted so they have a higher chance of being picked and we rinse and repeat.

At the end of the 10 rounds: We’re left with an ensemble of weighted learners trained and then repeatedly retrained on misclassified data from the previous rounds.
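
Here is a compact sketch of the rounds described above, using decision stumps as the weak learners. A few assumptions: the six data points are made up, the labels are encoded as +1 and -1, the sketch runs 3 rounds instead of 10, and it applies the sample weights directly when scoring each stump rather than drawing a weighted sample.

```python
from math import exp, log

def stump_predict(x, threshold, polarity):
    """One-level decision tree: answer `polarity` at or below the threshold."""
    return polarity if x <= threshold else -polarity

def best_stump(xs, ys, weights):
    """Return (weighted error, threshold, polarity) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(xs, ys, rounds):
    weights = [1 / len(xs)] * len(xs)
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * log((1 - error) / error)     # learner weight from its accuracy
        ensemble.append((alpha, threshold, polarity))
        # Misclassified samples get heavier, correctly classified ones lighter.
        weights = [w * exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical measurements: no single threshold separates the two classes.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost(xs, ys, rounds=3)
predictions = [predict(ensemble, x) for x in xs]
print(predictions)
```

A single stump always gets at least 2 of these 6 points wrong, while the weighted vote of 3 stumps classifies all of them correctly.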

Is this supervised or unsupervised? This is supervised learning, since each iteration trains the weaker learners with the labelled dataset.

Why use AdaBoost? AdaBoost is simple. The algorithm is relatively straight-forward to program.

In addition, it’s fast! Weak learners are generally simpler than strong learners. Being simpler means they’ll likely execute faster.

It’s a super elegant way to auto-tune a classifier, since each successive AdaBoost round refines the weights for each of the best learners. All you need to specify is the number of rounds.

Finally, it’s flexible and versatile. AdaBoost can incorporate any learning algorithm, and it can work with a large variety of data.

Where is it used? AdaBoost has a ton of implementations and variants. Here are a few:

- scikit-learn

- ICSIBoost

- gbm: Generalized Boosted Regression Models

kNN

What does it do? kNN, or k-Nearest Neighbours, is a classification algorithm. However, it differs from the classifiers previously described because it’s a lazy learner.

What’s a lazy learner? A lazy learner doesn’t do much during the training process other than store the training data. Only when new unlabelled data is input does this type of learner look to classify.

On the other hand, an eager learner builds a classification model during training. When new unlabelled data is input, this type of learner feeds the data into the classification model.

How do C4.5, SVM and AdaBoost fit into this? Unlike kNN, they are all eager learners.

Here’s why:

- C4.5 builds a decision tree classification model during training.

- SVM builds a hyperplane classification model during training.

- AdaBoost builds an ensemble classification model during training.

So what does kNN do? kNN builds no such classification model. Instead, it just stores the labelled training data.

When new unlabelled data comes in, kNN operates in 2 basic steps:

- First, it looks at the k closest labelled training data points — in other words, the k-nearest neighbours.

- Second, using the neighbours’ classes, kNN gets a better idea of how the new data should be classified.
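
These 2 steps fit in a few lines of Python. The labelled points and the function name are assumptions for illustration, and the sketch uses Euclidean distance with a simple majority vote (`math.dist` needs Python 3.8 or later).

```python
from collections import Counter
from math import dist

def knn_classify(training, query, k=3):
    """Classify `query` by majority vote among its k nearest labelled points."""
    # Step 1: find the k closest labelled training points.
    neighbours = sorted(training, key=lambda item: dist(item[0], query))[:k]
    # Step 2: let the neighbours' classes vote.
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# Hypothetical labelled training data.
training = [((1, 1), "red"), ((1, 2), "red"), ((2, 1), "red"),
            ((6, 6), "blue"), ((7, 6), "blue"), ((6, 7), "blue")]

print(knn_classify(training, (2, 2)))
print(knn_classify(training, (6, 5)))
```

Notice there is no training function at all: the “model” is just the stored list, which is what makes kNN a lazy learner.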

How does kNN figure out what’s closer? For continuous data, kNN uses a distance metric like Euclidean distance. The choice of distance metric largely depends on the data. Some even suggest learning a distance metric based on the training data. There are many more details and papers on kNN distance metrics.

For discrete data, the idea is to transform discrete data into continuous data. 2 examples of this are:

- Using Hamming distance as a metric for the “closeness” of 2 text strings.

- Transforming discrete data into binary features.
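
Both ideas are short enough to sketch directly. The function names and the crime categories used in the example are assumptions for illustration.

```python
def hamming_distance(a, b):
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance needs strings of equal length")
    return sum(ch1 != ch2 for ch1, ch2 in zip(a, b))

def one_hot(value, categories):
    """Turn one discrete value into a list of binary features."""
    return [1 if value == category else 0 for category in categories]

print(hamming_distance("karolin", "kathrin"))
print(one_hot("theft", ["assault", "burglary", "theft"]))
```

Once a discrete value has been turned into binary features, the usual distance metrics for continuous data can be applied to it.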

These 2 Stack Overflow threads have some more suggestions on dealing with discrete data:

- KNN classification with categorical data

- Using k-NN in R with categorical values

How does kNN classify new data when neighbours disagree? kNN has an easy time when all neighbours are the same class. The intuition is if all the neighbours agree, then the new data point likely falls in the same class.

How does kNN decide the class when neighbours don’t have the same class?

2 common techniques for dealing with this are:

- Take a simple majority vote from the neighbours. Whichever class has the greatest number of votes becomes the class for the new data point.

- Take a similar vote except give a heavier weight to those neighbours that are closer. A simple way to do this is to use reciprocal distance e.g. if the neighbour is 5 units away, then weight its vote 1/5. As the neighbour gets further away, the reciprocal distance gets smaller and smaller… exactly what we want!
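
The 2 voting techniques can give different answers, as this sketch shows. The three neighbours are made up so that one blue point is 1 unit away and two red points are 5 units away; the function names are assumptions.

```python
from collections import Counter, defaultdict
from math import dist

def majority_vote(neighbours, query):
    """Every neighbour gets one vote, regardless of distance."""
    return Counter(label for _, label in neighbours).most_common(1)[0][0]

def weighted_vote(neighbours, query):
    """Every neighbour's vote is weighted by the reciprocal of its distance."""
    votes = defaultdict(float)
    for point, label in neighbours:
        d = dist(point, query)
        votes[label] += 1 / d if d else float("inf")  # an exact match wins outright
    return max(votes, key=votes.get)

query = (0, 0)
# Hypothetical 3 nearest neighbours of the query point.
neighbours = [((1, 0), "blue"), ((5, 0), "red"), ((0, 5), "red")]

print(majority_vote(neighbours, query))  # red wins 2 votes to 1
print(weighted_vote(neighbours, query))  # blue wins 1.0 to 1/5 + 1/5
```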

Is this supervised or unsupervised? This is supervised learning, since kNN is provided a labelled training dataset.

Why use kNN? Ease of understanding and implementing are 2 of the key reasons to use kNN. Depending on the distance metric, kNN can be quite accurate. Here are 5 things to watch out for:

- kNN can get very computationally expensive when trying to determine the nearest neighbours on a large dataset.

- Noisy data can throw off kNN classifications.

- Features with a larger range of values can dominate the distance metric relative to features that have a smaller range, so feature scaling is important.

- Since data processing is deferred, kNN generally has greater storage requirements than eager classifiers.

- Selecting a good distance metric is crucial to kNN’s accuracy.

Where is it used? A number of kNN implementations exist:

- MATLAB k-nearest neighbour classification

- scikit-learn KNeighborsClassifier

- k-Nearest Neighbour Classification in R

Naive Bayes

What does it do? Naive Bayes is not a single algorithm, but a family of classification algorithms that share one common assumption:

Every feature of the data being classified is independent of all other features given the class.

What does independent mean? 2 features are independent when the value of one feature has no effect on the value of another feature.

For example:

Let’s say you have a patient dataset containing features like pulse, cholesterol level, weight, height and zip code. All features would be independent if the value of each feature had no effect on the others. For this dataset, it’s reasonable to assume that the patient’s height and zip code are independent, since a patient’s height has little to do with their zip code.

Here are 3 feature relationships which are not independent:

- If height increases, weight likely increases.

- If cholesterol level increases, weight likely increases.

- If cholesterol level increases, pulse likely increases as well.

The features of a dataset are generally not all independent.

And that ties in with the next question…

Why is it called naive? The assumption that all features of a dataset are independent is precisely why it’s called naive — it’s generally not the case that all features are independent.

What’s Bayes? Thomas Bayes was an English statistician after whom Bayes’ Theorem is named.

In a nutshell, the theorem allows us to predict the class given a set of features using probability.

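The simplified equation for classification looks something like this:

$$P(\text{Class A} \mid \text{Feature 1}, \text{Feature 2}) = \frac{P(\text{Feature 1} \mid \text{Class A}) \cdot P(\text{Feature 2} \mid \text{Class A}) \cdot P(\text{Class A})}{P(\text{Feature 1}) \cdot P(\text{Feature 2})}$$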

What does the equation mean? The equation finds the probability of Class A given Features 1 and 2. In other words, if you see Features 1 and 2, this is the probability the data is Class A.

The equation reads: The probability of Class A given Features 1 and 2 is a fraction.

The fraction’s numerator is the probability of Feature 1 given Class A multiplied by the probability of Feature 2 given Class A multiplied by the probability of Class A.

The fraction’s denominator is the probability of Feature 1 multiplied by the probability of Feature 2.

What is an example of Naive Bayes?

- We have a training dataset of 1,000 fruits.

- The fruit can be a Banana, Orange or Other (these are the classes).

- The fruit can be Long, Sweet or Yellow (these are the features).

What do you see in this training dataset?

- Out of 500 bananas, 400 are long, 350 are sweet and 450 are yellow.

- Out of 300 oranges, none are long, 150 are sweet and 300 are yellow.

- Out of the remaining 200 fruit, 100 are long, 150 are sweet and 50 are yellow.

If we are given the length, sweetness and colour of a fruit (without knowing its class), we can now calculate the probability of it being a banana, orange or other fruit.

Suppose we are told the unknown fruit is long, sweet and yellow.

Here’s how we calculate all the probabilities in 4 steps:

Step 1: To calculate the probability the fruit is a banana, let’s first recognize that this looks familiar. It’s the probability of the class Banana given the features Long, Sweet and Yellow, or more succinctly:

$$P(\text{Banana} \mid \text{Long}, \text{Sweet}, \text{Yellow})$$

This is exactly like the equation discussed earlier.

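Step 2: Starting with the numerator, let’s plug everything in.

$$P(\text{Long} \mid \text{Banana}) = 400/500 = 0.8$$

$$P(\text{Sweet} \mid \text{Banana}) = 350/500 = 0.7$$

$$P(\text{Yellow} \mid \text{Banana}) = 450/500 = 0.9$$

$$P(\text{Banana}) = 500/1000 = 0.5$$

Multiplying everything together (as in the equation), we get:

$$0.8 \times 0.7 \times 0.9 \times 0.5 = 0.252$$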

Step 3: Ignore the denominator, since it’ll be the same for all the other calculations.

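Step 4: Do a similar calculation for the other classes:

$$\text{Orange: } \frac{0}{300} \times \frac{150}{300} \times \frac{300}{300} \times \frac{300}{1000} = 0$$

$$\text{Other: } \frac{100}{200} \times \frac{150}{200} \times \frac{50}{200} \times \frac{200}{1000} = 0.01875$$

Since the banana value of 0.252 is greater than both 0.01875 and 0, Naive Bayes would classify this long, sweet and yellow fruit as a banana.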
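The four steps can be reproduced with a few lines of Python. This is a minimal sketch using only the counts from the fruit example; the names `COUNTS`, `TOTAL_FRUIT` and `numerator` are made up for illustration, and the shared denominator is ignored as in Step 3.

```python
# Counts taken from the fruit example: class -> (class total, feature counts).
COUNTS = {
    "Banana": (500, {"Long": 400, "Sweet": 350, "Yellow": 450}),
    "Orange": (300, {"Long": 0, "Sweet": 150, "Yellow": 300}),
    "Other": (200, {"Long": 100, "Sweet": 150, "Yellow": 50}),
}
TOTAL_FRUIT = 1000


def numerator(cls, observed_features):
    """P(feature_1 | cls) * ... * P(feature_n | cls) * P(cls)."""
    class_total, feature_counts = COUNTS[cls]
    score = class_total / TOTAL_FRUIT  # prior P(cls)
    for feature in observed_features:
        score *= feature_counts[feature] / class_total  # P(feature | cls)
    return score


observed = ["Long", "Sweet", "Yellow"]
scores = {cls: numerator(cls, observed) for cls in COUNTS}
prediction = max(scores, key=scores.get)  # class with the largest numerator
print(scores, prediction)
```

Because every class shares the same denominator, comparing the numerators is enough to pick the most probable class.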

Is this supervised or unsupervised? This is supervised learning, since Naive Bayes is provided a labelled training dataset in order to construct the tables.

Why use Naive Bayes? As you could see in the example above, Naive Bayes involves simple arithmetic. It’s just tallying up counts, multiplying and dividing.

Once the frequency tables are calculated, classifying an unknown fruit just involves calculating the probabilities for all the classes, and then choosing the highest probability.

Despite its simplicity, Naive Bayes can be surprisingly accurate. For example, it’s been found to be effective for spam filtering.

Where is it used? Implementations of Naive Bayes can be found in Orange, scikit-learn, Weka and R.

k-means Clustering

What does it do? K-means creates k groups from a set of objects so that the members of a group are more similar to each other than to members of other groups. It’s a popular cluster analysis technique for exploring a data set.

Hang on, what’s cluster analysis? Cluster analysis is a family of algorithms designed to form groups such that the group members are more similar to each other than to non-group members. Clusters and groups are synonymous in the world of cluster analysis.

Is there an example of this? Definitely. Suppose we have a dataset of patients. In cluster analysis, these would be called observations. We know various things about each patient like age, pulse, blood pressure, VO2max, cholesterol, etc. This is a vector representing the patient.

You can basically think of a vector as a list of numbers we know about the patient. This list can also be interpreted as coordinates in multi-dimensional space. Pulse can be one dimension, blood pressure another dimension and so forth.

You might be wondering:

Given this set of vectors, how do we cluster together patients that have similar age, pulse, blood pressure, etc?

Want to know the best part?

You tell k-means how many clusters you want. K-means takes care of the rest.

How does k-means take care of the rest? K-means has lots of variations to optimize for certain types of data.

At a high level, they all do something like this:

1) K-means picks points in multi-dimensional space to represent each of the k clusters. These are called centroids.

2) Every patient will be closest to 1 of these k centroids. They hopefully won’t all be closest to the same one, so they’ll form a cluster around their nearest centroid.

3) What we have are k clusters, and each patient is now a member of a cluster.

4) K-means then finds the center for each of the k clusters based on its cluster members (yep, using the patient vectors!).

5) This center becomes the new centroid for the cluster.

6) Since the centroid is in a different place now, patients might now be closer to other centroids. In other words, they may change cluster membership.

7) Steps 2-6 are repeated until the centroids no longer change and the cluster memberships stabilize. This is called convergence.
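The loop described above can be sketched in plain Python. This is a minimal illustration, not a production implementation: the function names, the choice of random observations as starting centroids, the fixed seed and the toy two-dimensional points are all assumptions.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def k_means(points, k, max_iterations=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # pick k starting centroids
    assignments = None
    for _ in range(max_iterations):
        # Assign every point to its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:  # memberships stable: converged
            break
        assignments = new_assignments
        # Move each centroid to the center of its current members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = mean_point(members)
    return centroids, assignments


# Hypothetical observations forming two well-separated groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, labels = k_means(points, k=2)
print(centroids, labels)
```

With data this well separated, the first three points end up in one cluster and the last three in the other, whichever observations are drawn as starting centroids.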

Is this supervised or unsupervised? It depends, but most would classify k-means as unsupervised. Other than specifying the number of clusters, k-means “learns” the clusters on its own without any information about which cluster an observation belongs to. k-means can be semi-supervised.

Why use k-means? I don’t think many will have an issue with this:

The key selling point of k-means is its simplicity. Its simplicity means it’s generally faster and more efficient than other algorithms, especially over large datasets.

It gets better:

K-means can be used to pre-cluster a massive data set followed by a more expensive cluster analysis on the sub-clusters. K-means can also be used to rapidly “play” with k and explore whether there are overlooked patterns or relationships in the data set.

It’s not all smooth sailing:

Two key weaknesses of k-means are its sensitivity to outliers, and its sensitivity to the initial choice of centroids. One final thing to keep in mind is k-means is designed to operate on continuous data — you’ll need to do some tricks to get it to work on discrete data.

Where is it used? A ton of implementations for k-means clustering are available online.

Description

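Given a set of observations (x1, x2, …, xn), where each observation is a d-dimensional real vector, k-means clustering aims to partition the n observations into k (≤ n) sets S = {S1, S2, …, Sk} so as to minimize the within-cluster sum of squares (WCSS) (i.e. variance). Formally, the objective is to find:

$$\underset{S}{\arg\min} \sum_{i=1}^{k} \sum_{x \in S_i} \lVert x - \mu_i \rVert^2$$

where μi is the mean of points in Si. This is equivalent to minimizing the pairwise squared deviations of points in the same cluster:

$$\underset{S}{\arg\min} \sum_{i=1}^{k} \frac{1}{2 \lvert S_i \rvert} \sum_{x, y \in S_i} \lVert x - y \rVert^2$$

The equivalence can be deduced from the identity

$$\sum_{x \in S_i} \lVert x - \mu_i \rVert^2 = \frac{1}{2 \lvert S_i \rvert} \sum_{x, y \in S_i} \lVert x - y \rVert^2$$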
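The equivalence between the mean-based and the pairwise form of the within-cluster sum of squares can be checked numerically for a single cluster. The sketch below is only an illustration; the function names and the sample cluster are made up.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def wcss_from_mean(cluster):
    """Sum of squared distances from each point to the cluster mean."""
    mu = centroid(cluster)
    return sum(squared_distance(p, mu) for p in cluster)


def wcss_from_pairs(cluster):
    """Sum of squared distances over all ordered pairs, divided by 2 * |cluster|."""
    total = sum(squared_distance(p, q) for p in cluster for q in cluster)
    return total / (2 * len(cluster))


# Hypothetical cluster of four two-dimensional points.
cluster = [(1.0, 2.0), (2.0, 4.0), (4.0, 3.0), (5.0, 7.0)]
print(wcss_from_mean(cluster), wcss_from_pairs(cluster))
```

Both functions return the same value for this cluster (24.0), so minimizing either quantity over all partitions leads to the same clustering.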

Algorithm

Standard algorithm

The most common algorithm uses an iterative refinement technique. Due to its ubiquity it is often called the k-means algorithm; it is also referred to as Lloyd’s algorithm, particularly in the computer science community.

Given an initial set of k means $m_1^{(1)}, \dots, m_k^{(1)}$ (see below), the algorithm proceeds by alternating between two steps:

Assignment step: Assign each observation to the cluster whose mean has the least squared Euclidean distance; this is intuitively the “nearest” mean. (Mathematically, this means partitioning the observations according to the Voronoi diagram generated by the means.)

$$S_i^{(t)} = \left\{ x_p : \lVert x_p - m_i^{(t)} \rVert^2 \le \lVert x_p - m_j^{(t)} \rVert^2 \ \forall j, 1 \le j \le k \right\}$$

where each $x_p$ is assigned to exactly one $S^{(t)}$, even if it could be assigned to two or more of them.

Update step: Calculate the new means to be the centroids of the observations in the new clusters.

$$m_i^{(t+1)} = \frac{1}{\lvert S_i^{(t)} \rvert} \sum_{x_j \in S_i^{(t)}} x_j$$

The algorithm has converged when the assignments no longer change. There is no guarantee that the optimum is found using this algorithm.

The algorithm is often presented as assigning objects to the nearest cluster by distance. Using a different distance function other than (squared) Euclidean distance may stop the algorithm from converging. Various modifications of k-means such as spherical k-means and k-medoids have been proposed to allow using other distance measures.

Initialization Methods

Commonly used initialization methods are Forgy and Random Partition. The Forgy method randomly chooses k observations from the data set and uses these as the initial means. The Random Partition method first randomly assigns a cluster to each observation and then proceeds to the update step, thus computing the initial mean to be the centroid of the cluster’s randomly assigned points. The Forgy method tends to spread the initial means out, while Random Partition places all of them close to the center of the data set. According to Hamerly et al., the Random Partition method is generally preferable for algorithms such as the k-harmonic means and fuzzy k-means. For expectation maximization and standard k-means algorithms, the Forgy method of initialization is preferable. A comprehensive study by Celebi et al., however, found that popular initialization methods such as Forgy, Random Partition, and Maximin often perform poorly, whereas the approach by Bradley and Fayyad performs “consistently” in “the best group” and K-means++ performs “generally well”.
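The two basic initialization methods can be sketched as follows. The function names, the retry loop that redraws the labels when a cluster comes out empty, and the grid of sample points are assumptions of this sketch rather than part of the methods as published.

```python
import random


def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def forgy_init(points, k, rng):
    """Forgy: use k randomly chosen observations as the initial means."""
    return rng.sample(points, k)


def random_partition_init(points, k, rng):
    """Random Partition: label every observation at random, then use the
    centroid of each label's points as an initial mean."""
    if k > len(points):
        raise ValueError("k must not exceed the number of observations")
    while True:
        labels = [rng.randrange(k) for _ in points]
        if len(set(labels)) == k:  # assumption: redraw if a cluster is empty
            break
    return [
        centroid([p for p, label in zip(points, labels) if label == c])
        for c in range(k)
    ]


rng = random.Random(42)
# Hypothetical data: a 10 x 10 grid of points.
points = [(float(x), float(y)) for x in range(10) for y in range(10)]
forgy_means = forgy_init(points, 3, rng)
partition_means = random_partition_init(points, 3, rng)
print(forgy_means)
print(partition_means)
```

Forgy means are always actual observations, whereas Random Partition means are averages over randomly chosen subsets, which is why they tend to sit near the center of the data.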

K-Means Algorithm

K-means clustering aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest centroid.

Process

1) Initially, the number of clusters must be known; let it be k.

2) The initial step is to choose a set of k instances as the centres of the clusters.

3) Next, the algorithm considers each instance and assigns it to the closest cluster.

4) The cluster centroids are recalculated either after a whole cycle of re-assignment or after each instance assignment.

5) This process is iterated.

The complexity of the k-means algorithm is O(tkn), where n is the number of instances, k is the number of clusters, and t is the number of iterations, which makes it relatively efficient. It often terminates at a local optimum. Its disadvantages are that it is applicable only when a mean is defined and that k, the number of clusters, must be specified in advance. It is also unable to handle noisy data and outliers, and it is not suitable for discovering clusters with non-convex shapes.

OBJECTIVE

To introduce a system by which crime rate can be reduced by analyzing the crime data.

Approach

- As a problem-driven project, a significant portion of this project will involve implementing various regression algorithms. The first challenge in this problem will be feature extraction. PCA and other methods for analysing correlation between the features we extract, as well as simple data exploration methodologies (such as analysing trends over time), will be helpful for feature prediction.

- For ML models, we will begin with simple linear regression (Bayesian) on the features we ultimately extract. We expect numerous features, so we will need to enforce sparsity via regularization or some form of dimensionality reduction. Ultimately we would like to try to implement a neural network, specifically a recurrent neural network, for practice and to see if they lead to improved performance. Our understanding is that RNNs are good for capturing patterns in time series data, which is how this data is structured. In that case we would implement the neural net in Torch. Beyond that, we will need to explore various other regression models to determine what is appropriate for the data and context. If time allows, we would also like to explore other models typically good for time series data, such as Hidden Markov Models or Gaussian Process, though we’re somewhat unfamiliar with both.

- In the end, we will likely need to develop a more sophisticated model that more fully captures the dynamics of crime. For example, some research has been done into the mutually excitatory nature of crime, e.g. crime in one area causes surrounding areas to be more susceptible to crime. These types of modelling enhancements may lead to better predictive power. We expect to spend a good amount of time tweaking different model ideas, and measuring their performance.

PROPOSED SYSTEM & ARCHITECTURE

After the literature review, we identified the need for an open-source data mining tool that is easy to set up and makes analysis straightforward. Crime analysis is therefore performed on the crime dataset by applying the k-means clustering algorithm using the RapidMiner tool.

The procedure is given below:

- First, take the crime dataset.

- Filter the dataset according to requirements and create a new dataset containing the attributes needed for the analysis.

- Open the RapidMiner tool, read the Excel file of the crime dataset, apply the “Replace Missing Values” operator to it and execute the operation.

- Apply the “Normalize” operator to the resulting dataset and execute the operation.

- Perform k-means clustering on the normalized dataset and execute the operation.

- In the plot view of the result, plot the data between crimes to obtain the required clusters.

- Analyse the clusters formed.
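The two preprocessing steps in the procedure above (replacing missing values and normalizing) can be approximated in plain Python. This is a rough sketch under stated assumptions: missing values are filled with the column mean and normalization is a z-score transformation, neither of which is confirmed by the procedure itself, and the small table of crime counts is invented for illustration.

```python
from statistics import mean, pstdev


def replace_missing_with_mean(rows):
    """Fill None entries with the mean of the known values in that column."""
    columns = list(zip(*rows))
    column_means = [mean(v for v in col if v is not None) for col in columns]
    return [
        [m if v is None else v for v, m in zip(row, column_means)]
        for row in rows
    ]


def z_score_normalize(rows):
    """Rescale each column to zero mean and unit (population) standard deviation."""
    columns = list(zip(*rows))
    mus = [mean(col) for col in columns]
    sigmas = [pstdev(col) for col in columns]
    return [
        [(v - mu) / sigma if sigma > 0 else 0.0
         for v, mu, sigma in zip(row, mus, sigmas)]
        for row in rows
    ]


# Hypothetical table: one row per area, columns are counts of two crime types.
raw = [
    [10.0, 2.0],
    [None, 4.0],  # a missing count
    [30.0, 6.0],
]
filled = replace_missing_with_mean(raw)
normalized = z_score_normalize(filled)
print(filled)
print(normalized)
```

The normalized rows are what a k-means step would then take as input, so that no single crime type dominates the distance calculation.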

Data Flow Diagram

ER Diagram

Requirements of Project

- Linux operating System

- Python 2.7

- Flask Framework

- Flask wtForms

- Flask Mysqldb

- Numpy

- Flask Mail

- SciPy

- Scikit-learn

Steps for setup:

- First, install any Linux operating system

- Install Python from the official Python download page

- Open the terminal (an Internet connection is required)

- To install flask framework type the following command

- pip install Flask

- To install flask wt-forms type the following command

- pip install Flask-WTF

- To install flask MySQL type the following command

- pip install Flask-MySQLdb

- To install flask Mail type the following command

- pip install Flask-Mail

- To install Passlib type the following command

- pip install passlib

- To install Numpy type the following command

- pip install numpy

- To install SciPy type the following command

- python -m pip install scipy

- To install Scikit-learn type the following command

- pip install -U scikit-learn

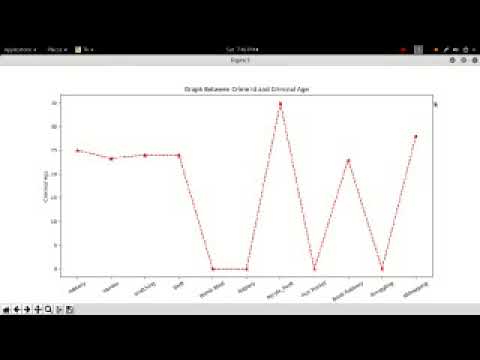

Screenshots of Proposed System

ADVANTAGES, DISADVANTAGES & FUTURE SCOPE

Advantages

- Helps to prevent crime in society

- System will keep historical record of crime.

- System is user friendly

- Saves time

Disadvantages

- Users who don’t have internet connection can’t access the system.

- Users must enter correct records otherwise system will provide wrong information.
